The latest advances in human-like robots have led many to reflect on the future of AI and robotics, and on the ethics that should govern them. Rachel Gbolaru, a Year 12 student from The Bishop's Stortford High School, explored many of the unanswered questions these robots raise whilst on work experience at Delta2020.

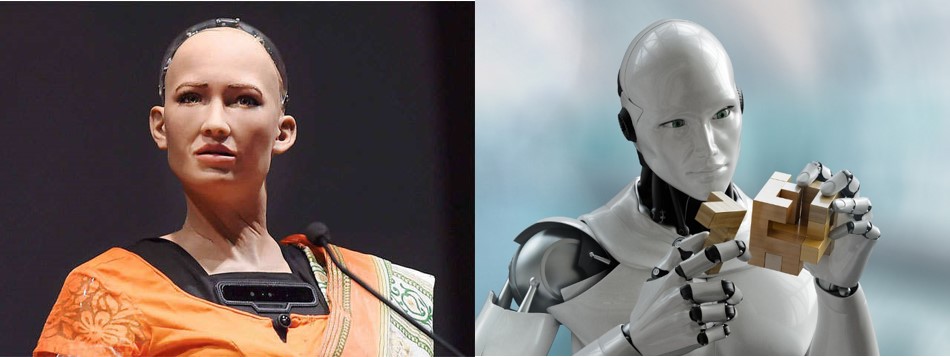

At an IT conference in late 2017, the artificially intelligent humanoid robot Sophia was granted citizenship by Saudi Arabia, becoming the first ‘non-human’ to hold a nationality. Sophia was also appointed the UN’s first non-human “innovation champion.” Although most observers saw this as more of a publicity stunt than a meaningful legal recognition, some saw the gesture as openly disparaging human rights and the law. The controversy has raised some very important questions that have yet to be answered. Should robots be granted rights? If so, what kind of rights should they be given?

What does it mean to give a robot citizenship?

It is unclear what rights Sophia was granted along with her citizenship. Typically, however, a citizen of a country has the right to own property, vote in elections and even run for elected office. If we start granting citizenship to robots, will that begin to trivialise these rights?

Dr Kate Darling, a researcher at MIT’s Media Lab, says that we usually award rights to people according to what they are capable of. For example, a child does not have the same rights as an adult because a child is capable of less; children are not able to make all of their own choices and decisions. She said, “Given that robots are still primitive pieces of technology, they are not going to be able to make use of their rights.”

Should we give robots rights?

A major study by the University of Washington showed that humans already attribute moral accountability to robots and ‘project awareness onto them’ when they look realistic. Essentially, the more life-like and intelligent a robot appears to be, the more we believe that it is like us, even though it is not.

Generally, what makes humans human is our capacity to feel pain, to be self-aware and to be morally responsible. Humans naturally develop these traits; machines do not. Could a citizen who is unable to feel emotions, be self-aware or understand morals ever be a good citizen?

In 2017, the European Parliament proposed drafting a set of regulations to govern the use and creation of robots and AI, and debated whether to grant robots “electronic personalities” so that the most capable AI would carry rights and responsibilities. MEP Mady Delvaux said that one of the reasons such regulations were needed was to “ensure that robots are and will remain in the service of humans…” In a letter to the European Commission, 156 experts from 14 countries called on the Commission to reject the European Parliament’s proposal, warning that granting robots legal personhood would “erase the responsibility of manufacturers.” They added that by seeking legal personhood for robots, manufacturers were simply “trying to absolve themselves of responsibility for the actions of their machines.”

Dr Kate Darling has also said that there could come a time when we need to think about certain “legal protection” for robots: “Not for the robot’s sake, but for our own.” The more advanced robots become, the more we as humans will interact with them, and those interactions could affect our own behaviour for the worse. For example, she says, “if it’s desensitizing to children or even to adults to be violent towards very life-like robots…then there would be an argument for restricting what people can and cannot do.”

A very prominent example of this is Amazon’s Alexa, an artificially intelligent virtual assistant that can answer questions about the weather, stream news and music, and respond to voice commands to control home appliances such as lighting. Alexa is, of course, only one of several voice assistants built into smart speakers, and in 2018 over 9.5 million people in the UK owned a smart speaker.

Despite the great benefits of a virtual assistant that can recognise your voice and respond to your commands, there is growing anxiety about the use of these assistants in the home and their effect on people’s behaviour. There is an increasing fear, especially among parents, that children are not learning to communicate politely and considerately with their peers or with the people they meet, because at home they are used to ordering their smart speakers to tell them a joke, read them a story or turn the lights on or off. Some parents have noted that their children have become more impatient and irritable since gaining access to Alexa, on top of the other technology available to them today.

This small example shows how great an impact AI and robots can have on our lives. As robots become more advanced and more integrated into our societies, performing the jobs that people do now and carrying out daily tasks for us, will people become more intolerant, impatient and irritable? Could future generations grow up to be more demanding and ruder?

Why discuss granting robots rights?

Already, some robots can match or even exceed human capabilities in certain areas. For example, robots can operate in places where humans cannot survive, such as deep space, the ocean and even the inside of nuclear reactors. Machines can translate between many languages without spending months or years learning them. Some AI systems can detect certain diseases more accurately than human doctors, and robots can perform many tasks more quickly and efficiently than humans. However, they still lack the dexterity, intelligence and empathy of humans. Scientists and engineers predict that robots may soon be able to match humans in meaningful qualities such as creativity, intelligence, awareness and emotions; these are the areas in which robots are currently less useful than humans.

Humans have always been the most advanced, most intelligent and most creative creatures; our emotional intelligence and our ability to feel and empathise with others are what set us apart from other sentient creatures, such as animals. If another species could match us in what used to be our unique strengths, those strengths would no longer be distinct to humanity. Should that species be able to claim rights, freedoms and protections? If this happens, these questions will need to be answered.

Which robots should be given rights, and what rights should they be given?

As robots begin to take over workplaces, the question of whether robots should have workers’ rights arises. Will humans discriminate against these machines, thinking that because they are only brainless, thoughtless machines, it is not wrong to do so? Human workers today are entitled to paid holidays and, in some cases, medical benefits. Will robots be awarded the same rights?

Currently, acts of hostility and violence against robots have no legal consequence beyond the ordinary laws on damaging someone else’s property. Machines have no protected legal rights; they have no feelings or emotions. However, robots are becoming more advanced and are being developed with higher levels of artificial intelligence. If robots one day start to think more like humans, legal standards will need to change.

Recently, the European Commission launched a pilot project to test ethical rules for the development and application of Artificial Intelligence. The Commission’s High-Level Expert Group on AI is made up of 52 experts from various fields. In March 2019, the group submitted its Ethics Guidelines for Trustworthy AI to the Commission, setting out seven “key requirements” for trustworthy AI.

Some have proposed the concept of a “personhood test”: a test that could show whether a robot or AI deserves rights. Standard measures could include a minimum level of intelligence, the ability to feel emotions or experience sensations, self-control, a sense of the past and future, concern for others, and the ability to control one’s own existence and exercise free will.

A machine that attained this level of sophistication might be eligible for human rights.

Some have suggested that a set of rights for robots could include the right not to be shut down against their will and the right not to have their source code manipulated against their will.

Should robots be given rights?

Giving robots rights and treating them as humans, or as an intelligent species, will need careful thought as robots become more advanced and life-like. Right now, I believe that it is more important to spend our time and resources exploring the different ways that robots and Artificial Intelligence can help us now and in the near future. Researching the many possibilities and outcomes of AI will give us a better understanding of these systems and how they work, which could benefit us if a time ever comes when robots need their own set of rights.

Overall, the potential for robots and AI in our world is huge. They can be used in so many areas, and we, as their creators and designers, have the power to build highly capable machines that can perform difficult tasks. I do not believe that robots and AI can attain consciousness and start to demand their rights; however, it may be necessary to put in place certain regulations on how we treat, care for and even dispose of robots.

Rachel Gbolaru, The Bishop's Stortford High School

Sources

https://www.pri.org/stories/2017-11-01/saudi-arabia-has-new-citizen-sophia-robot-what-does-even-mean

https://gizmodo.com/when-will-robots-deserve-human-rights-1794599063

https://nypost.com/2018/11/09/robots-will-soon-match-humans-in-creativity-emotional-intelligence/

https://www.weforum.org/agenda/2016/10/top-10-ethical-issues-in-artificial-intelligence/

https://phys.org/news/2017-12-robots-rights.html

https://www.seattletimes.com/business/alexa-creates-challenges-opportunities-for-parents-of-young-kids/

https://www.asme.org/engineering-topics/articles/robotics/do-robots-deserve-legal-rights

https://techcrunch.com/2019/04/08/europe-to-pilot-ai-ethics-rules-calls-for-participants/

https://ec.europa.eu/digital-single-market/en/news/draft-ethics-guidelines-trustworthy-ai